Straight from the Desk

Syz the moment

Live feeds, charts, breaking stories, all day long.

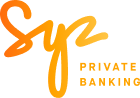

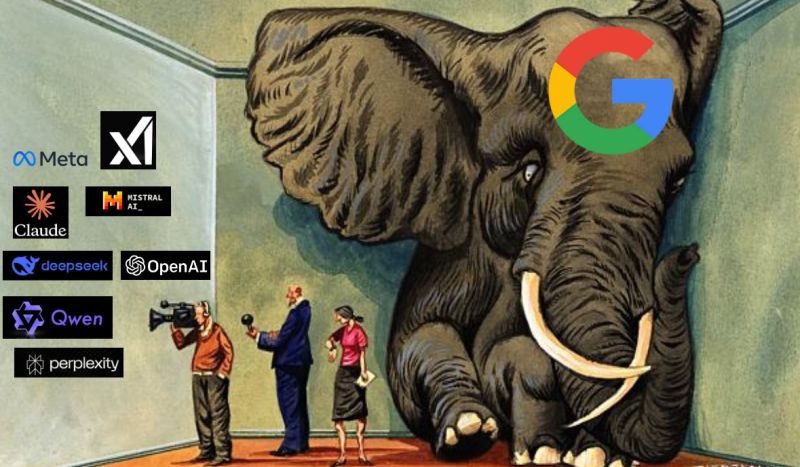

It now looks as if the race between Microsoft and Google for the best AI strategy has been decided

Source: HolgerZ, Bloomberg

🚨 $GOOGL co-founders Larry Page and Sergey Brin are now the 2nd and 3rd richest people on the planet.

Yes, Google just leap-frogged nearly everyone. According to Bloomberg’s latest rankings, here is the current top 10 richest people in the world:

1️⃣ Elon Musk: $442B

2️⃣ Larry Page: $276B

3️⃣ Sergey Brin: $258B

4️⃣ Larry Ellison: $254B

5️⃣ Jeff Bezos: $251B

6️⃣ Mark Zuckerberg: $225B

7️⃣ Bernard Arnault: $196B

8️⃣ Steve Ballmer: $166B

9️⃣ Michael Dell: $155B

🔟 Jensen Huang: $155B

The Google founders jumping to #2 and #3 is a reminder of one thing: AI isn’t just reshaping technology, it’s reshaping the leaderboard of global wealth in real time.

Source: Evan

The forward P/E of Alphabet (Google) looks like a meme stock chart. It has nearly doubled off its low. 👇

Source: Matt Cerminaro @mattcerminaro

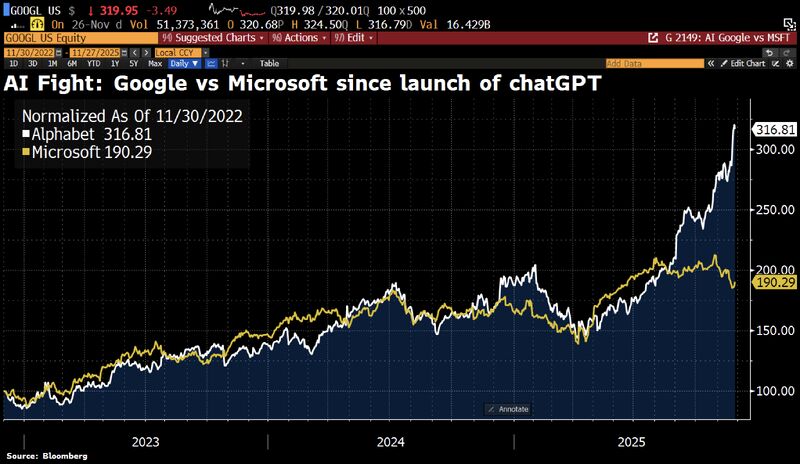

A great post and chart by @AndreasSteno on X: It's not an AI scare. It's an OpenAI scare.

The "Google bets" basket (Alphabet, Broadcom and Celestica) just hit a new all-time high, while the "OpenAI" basket (Nvidia, SoftBank and Microsoft) has been hit hard since the end of October.

Source: Steno Research, Macrobond, Bloomberg

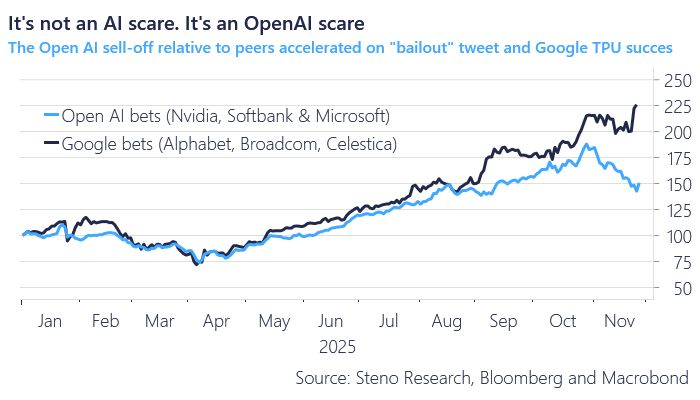

Here are the key takeaways from a great post by Kris Patel on X: 🔥 $GOOGL vs. $NVDA — The Market is mispricing the AI War.

Everyone’s arguing about who has the fastest chip. But that’s not the real disruption. Google isn’t trying to beat Nvidia on speed. Google is trying to beat Nvidia on economics. Here’s the overlooked truth:

1️⃣ The “Nvidia Tax”: Nvidia sells to hyperscalers with 70%+ margins. AWS, Azure, and everyone else pay it, and pass it on to you.

2️⃣ Google plays a different game: They’re the only hyperscaler that doesn’t need to profit from selling chips. They build TPUs at cost and control the entire stack: Chip → Interconnect → Data Center → Cloud. No margin stacking. No tax.

3️⃣ Training ≠ Inference: Training = Ferrari (Nvidia dominates). Inference = Semi-truck (cheap, reliable, scaled). As AI matures, ~90% of spend shifts to inference.

And here’s the bombshell: 👉 If Google drives cost-per-token toward zero with TPUs + aggressive cloud pricing… Raw speed stops mattering. Economics wins.

Nvidia is selling generators. Google is building the electric grid. Cheap compute + massive distribution = 🏰 Empire.

Source: Kris Patel

Nvidia responds to news of Meta using Google's TPUs, sending $NVDA stock 6% lower:

Nvidia says they are "delighted by Google's success" and they "continue to supply Google." They also say, "Nvidia is a generation ahead of the industry" and "offers greater performance, versatility, and fungibility." The AI wars are heating up.

Source: The Kobeissi Letter
