Straight from the Desk

Syz the moment

Live feeds, charts, breaking stories, all day long.


The AI action is in Asia

The chart below shows SK Hynix, Samsung and Advantest all skyrocketing. Meanwhile, Nvidia $NVDA (the yellow line at the bottom) remains stuck. Source: LSEG, TME

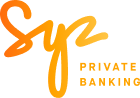

"A pair trade for the AI transition: long API / short slides"

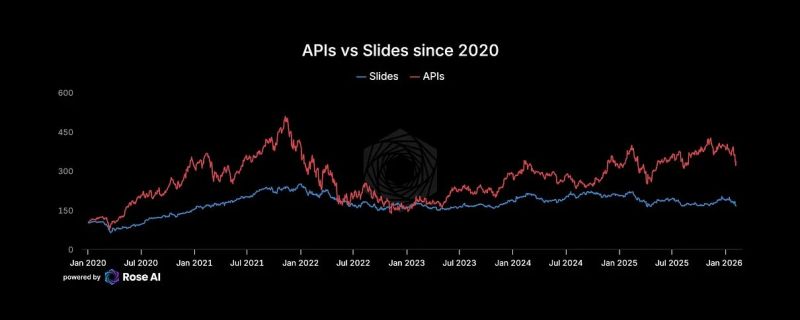

🚨 The "Software is Dead" Narrative is Wrong. You're Just Looking at the Wrong Software.

The market is panicking. The $IGV ETF is down 30%. The headlines say AI is writing code now, so software companies are toast. 📉

They're making a massive category error. If you're investing without looking at the "plumbing," you're missing the biggest bifurcation of the decade. Here is how the "Singularity" is actually playing out:

1. The Victim: Human-UI SaaS (Type 1) 🖱️

If your software requires a human to stare at a dashboard for 8 hours, you have a target on your back.

- The Logic: AI agents replace humans. One fewer customer service rep = one fewer Zendesk seat. One fewer PM = one fewer Monday.com seat.
- The Result: Seat-based SaaS compresses as headcount shrinks.

2. The Winner: Bot Infrastructure (Type 2) 🤖

AI agents don't have eyes. They use APIs. They don't click; they call.

- The Logic: One human generates a few clicks an hour. One AI agent generates thousands of API calls per minute.
- The Winners: The "Tollbooth Operators": Okta, MongoDB, Snowflake, Datadog. They don't care whether the user is a human or a bot; they charge per unit of consumption. Bots consume orders of magnitude more than we do.

🪦 The Real Casualty: The "Body Shops"

The IT outsourcing model (Infosys, Wipro, Cognizant) is built on labor arbitrage: hire for $15/hr in Bangalore, bill for $80/hr in NYC.

- The Problem: AI makes labor arbitrage worthless. You can't get cheaper than "nearly free."
- The Proof: India's Big 4 are already cutting thousands of heads. The hiring machine has stopped. 🛑

The Bottom Line: The market is selling "Technology" as a monolith. This is a mistake. AI replaces the road workers (IT services/Human-UI). AI pays the tolls (infrastructure/APIs).

The Play: Buy the dip in APIs. Short the slides. The infrastructure layer is the only place to hide when the bots take over.

The Most Important Investing Theme of 2026 is HALO

HALO stands for Heavy Assets, Low Obsolescence. These are companies that AI cannot disrupt: there's nothing Sundar Pichai and Sam Altman can take from them. Source: Ritholtz

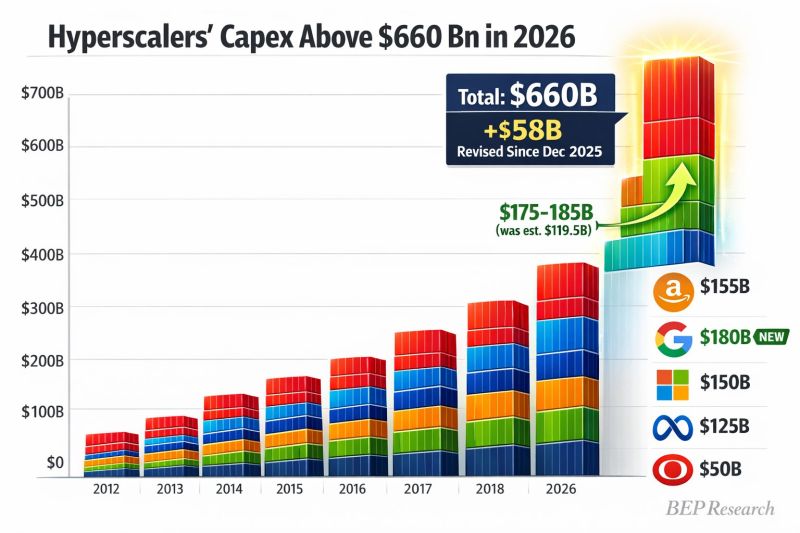

Hyperscaler capex just got revised UP $58B to $660B for 2026

Alphabet dropped a BOMB: $175-185B capex guidance vs. a $119.5B estimate. That's +$55B from Google alone, even at the low end of guidance. The AI infrastructure arms race just went nuclear ☢️ Source: Ben Pouladian @benitoz

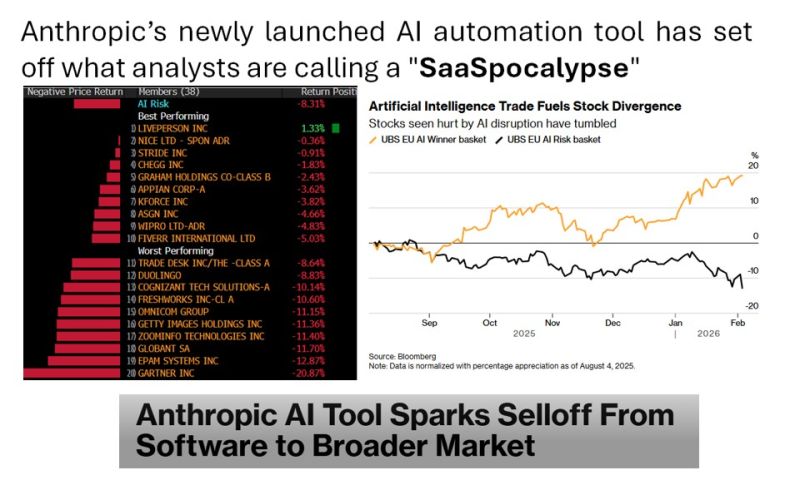

Anthropic’s newly launched AI automation tool has set off what analysts are calling a "SaaSpocalypse", rattling global technology markets

Anthropic recently released 11 new plug-ins for its Claude Cowork agent, an agentic, no-code AI assistant designed for enterprise users. The tool is aimed at automating tasks across legal, sales, marketing and data analysis functions.

Vishwa Sharan @vmsharan_: "Anthropic's latest AI tool can automate tasks in legal, sales, marketing, and data analysis: routine work such as document review, compliance tracking, risk flagging, and data processing. It essentially targets professionals in service automation. Expect reduced demand for consulting engagements and fewer billing hours. IT firms may see slower headcount growth or reductions as AI handles clerical work, shifting focus to higher-level AI oversight and innovation." Source: Bloomberg

The new merger between xAI and SpaceX brings the following under one company

- SpaceX rockets, with a 90% market share of mass launched into orbit.
- Starlink, the only global telecommunications system.
- Starshield, a version of Starlink for the US military that lets it communicate around the planet without using any other country's telecom systems.
- xAI and Grok.
- X (formerly Twitter).

Source: Sawyer Merritt @SawyerMerritt, WallStreetMav (@Wall Street Mav)

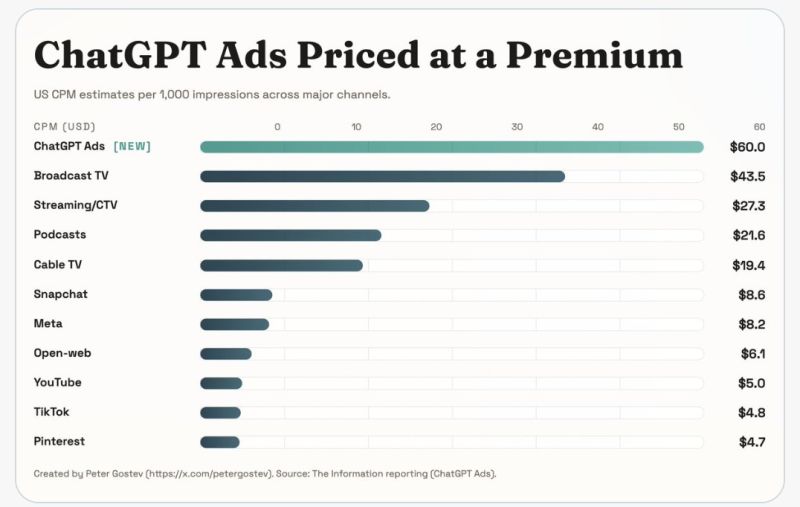

According to The Information, ChatGPT ads are being priced at $60 per 1,000 impressions, far above other digital ads and even above TV/streaming

Source: Peter Gostev @petergostev
