Straight from the Desk

Syz the moment

Live feeds, charts, breaking stories, all day long.

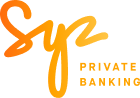

The day the AI bubble implodes, let's keep this in mind

Yes, it happened. Source: Jeff Weniger

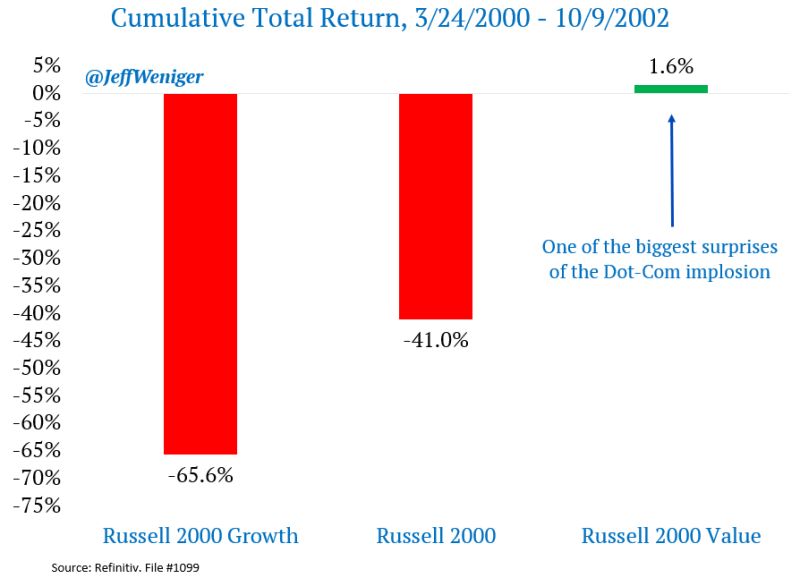

A $100B "Santa Rally" might have arrived via the UAE sovereign wealth funds

Here is the breakdown of what is going on:

1. The $100 Billion Life Raft 💰 OpenAI is reportedly looking to raise a staggering $100B (Source: WSJ). With a target valuation of $830B, this isn't just a fundraising round; it’s a geopolitical event. Sam Altman isn't just looking for "growth capital"; he’s securing a bridge to 2030 profitability.

2. From Debt to Equity 📉 Private credit markets (like Blue Owl) have been tightening the taps on AI infrastructure. OpenAI is pivoting from cheaper debt to massive equity dilution. Why? Because when you’re "incinerating" cash to build the future, you need a sovereign-sized safety net.

3. The Oracle "Survival" Surge 🚀 This isn't just about OpenAI. This cash flows directly into compute. Oracle and CoreWeave are the primary beneficiaries. This funding ensures OpenAI can pay its hyperscaler partners for years to come. The market is breathing a sigh of relief: bankruptcy risks for AI infrastructure plays are evaporating.

4. The Credit Default Swap (CDS) Collapse 📉 Before tonight, Oracle’s CDS was at a 16-year high (~156bps). Investors were pricing in serious risk. Now? We expect a short-covering frenzy. The "AI winter" just got hit by a heatwave of Emirati capital.

The Bottom Line: The world was waiting for the US to backstop the AI revolution. Instead, Abu Dhabi stepped up. This $100B injection doesn't just fund a chatbot; it stabilizes the entire AI ecosystem for the next 24 months. Is this the start of the 2025 bull run, or just a very expensive bridge to the unknown?

BREAKING 🚨: Oracle

$ORCL has now plunged 48% since its all-time high on September 10, a total market cap loss of $475 billion 📉📉 Note that the stock is UP 6% in after-hours trading on the TikTok deal and on OpenAI securing $100B in funding from a UAE sovereign wealth fund. Source: Barchart @Barchart

AI CDS levels update

CoreWeave in blue, Oracle in red. Source: www.zerohedge.com

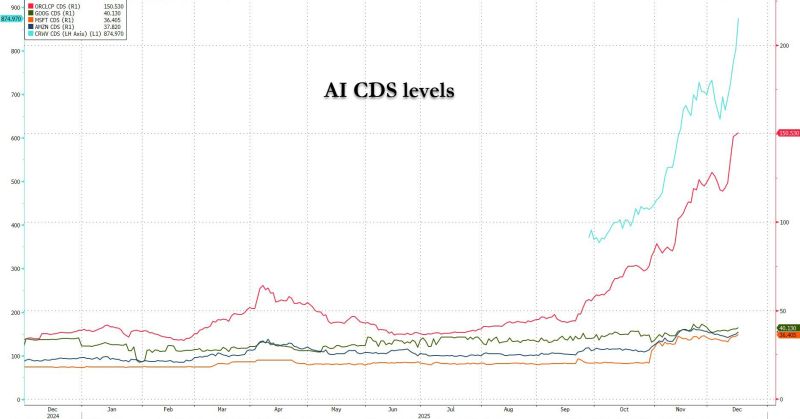

Mind the gap...

The Epoch.ai chart of GPU clusters reveals a 16x surge in data center power demand. With 94% of the infrastructure not yet built and power constraints ahead, hyperscalers and start-ups are racing to control the future of AI. The US might lose the AI race not for lack of talent or capital but for lack of power capacity. Source: Markets & Mayhem

🚨 AI Power Shift Alert 🚨

Amazon is in talks to invest $10B+ in OpenAI, pushing its valuation north of $500B. But this isn’t just about capital. It’s about control of the AI stack. OpenAI would:

- Use Amazon Trainium AI chips
- Rent massive AWS data-center capacity
- Deepen infrastructure dependence beyond Microsoft

This comes right after OpenAI restructured its relationship with Microsoft, unlocking deals with rival cloud providers.

💡 What’s happening behind the scenes:

- OpenAI has already committed $38B over 7 years to Amazon servers
- It has $1.5T (!) in long-term infrastructure deals with Nvidia, Oracle, AMD & Broadcom
- Nvidia alone plans up to $100B in a multi-year partnership

🤔 Investors are uneasy. Many of these deals are circular: suppliers invest in OpenAI, OpenAI buys their hardware, and sometimes takes equity in return.

Meanwhile, rivals aren’t standing still:

- Anthropic has raised ~$26B from Amazon, Google, Microsoft & Nvidia
- Amazon alone has put $8B into Anthropic since 2023

⚠️ Key limitation: Amazon still won’t get rights to OpenAI’s most advanced models. Microsoft keeps exclusivity until the early 2030s.

🛒 Bonus twist: Amazon and OpenAI are also discussing e-commerce integrations, as OpenAI expands beyond chat into platforms like Etsy, Shopify & Instacart.

📌 Big takeaway: The AI race is no longer about models. It’s about chips, clouds, capital, and distribution, and Big Tech is locking it all down fast.

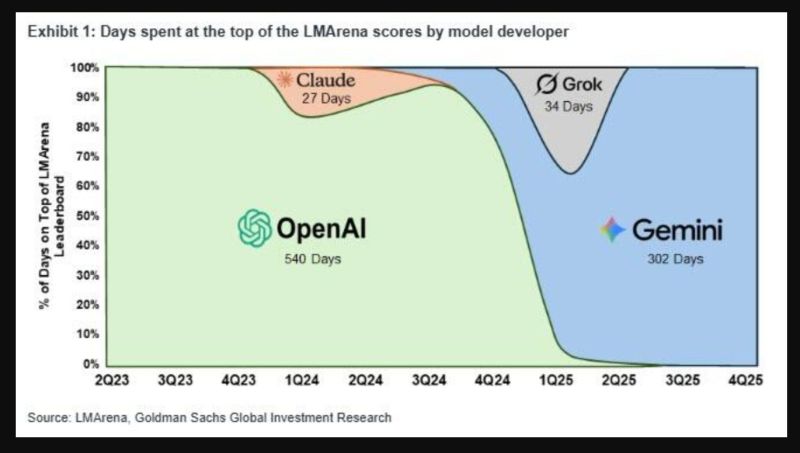

The 'AI Revolution' is still only 2 1/2 years old (if you date it to the release of ChatGPT)

We have experienced swings in which models are gaining the most attention, as Goldman's Jim Schneider illustrates in the chart below... Source: zerohedge

Everyone is talking about Oracle CDS, but CoreWeave $CRWV CDS is the real gem...

Source: RBC, Bloomberg
