Straight from the Desk

Syz the moment

Live feeds, charts, breaking stories, all day long.


💥 Meta is building a $27 BILLION data center in Louisiana…

👉 But none of it shows up on Meta’s balance sheet. How? Meta shifted the entire project into a joint venture:

- 🔹 Meta owns 20%
- 🔹 Blue Owl Capital owns 80%
- 🔹 A holding company (Beignet Investor) issued $27.3B in bonds, mostly bought by Pimco
- 🔹 Meta will rent the data center starting in 2029

And here’s the kicker: the lease is structured to qualify as an operating lease, not a finance lease, which lets Meta avoid listing the giant asset and the massive debt.

But peel back the layers and things get messy:

- 🔥 Meta runs the data center
- 🔥 Meta carries the risk of cost overruns
- 🔥 Meta guarantees the full value of the bonds if it doesn’t renew the lease
- 🔥 Yet Meta insists it doesn’t “control” the venture enough to count it on the books

Even the Wall Street Journal called it “artificial accounting.”

🧩 It’s part of a bigger trend: tech giants want unlimited AI infrastructure…

🚫 …but they don’t want the debt that comes with it.

Morgan Stanley estimates the industry could need $800B in off-balance-sheet financing by 2028. Meta may not be borrowing on paper, but economically this is debt with extra steps.

What do you think: smart financial engineering or a red flag in disguise?

Source: Hedgie

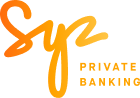
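The operating-vs-finance split comes down to a short checklist. Under US GAAP (ASC 842), a lessee treats a lease as a finance lease if any one of five tests is met, and as an operating lease otherwise. Below is a minimal sketch of that checklist, assuming fixed annual payments made at the end of each year. The 75% and 90% cutoffs are widely used rules of thumb for "major part" and "substantially all", not hard thresholds written into the standard, and the `Lease` fields and all numbers are hypothetical, not terms of Meta's actual contract.

```python
from dataclasses import dataclass, replace


@dataclass
class Lease:
    """Hypothetical lessee-side inputs for an ASC 842 classification check."""
    transfers_ownership: bool                 # title passes to the lessee at the end
    purchase_option_reasonably_certain: bool  # lessee is expected to buy the asset
    lease_term_years: int
    remaining_economic_life_years: int
    annual_payment: float                     # fixed payment at the end of each year
    residual_value_guarantee: float           # amount the lessee guarantees at lease end
    fair_value: float                         # fair value of the underlying asset
    discount_rate: float                      # annual rate used for present values
    specialized_asset: bool                   # asset has no alternative use to the lessor


def present_value(lease: Lease) -> float:
    """PV of the lease payments plus the lessee's residual value guarantee."""
    n, r = lease.lease_term_years, lease.discount_rate
    pv_payments = sum(lease.annual_payment / (1 + r) ** t for t in range(1, n + 1))
    pv_guarantee = lease.residual_value_guarantee / (1 + r) ** n
    return pv_payments + pv_guarantee


def classify(lease: Lease) -> str:
    """Return 'finance' if any of the five tests is met, else 'operating'."""
    tests = [
        lease.transfers_ownership,
        lease.purchase_option_reasonably_certain,
        # "major part" of the remaining economic life (75% rule of thumb)
        lease.lease_term_years >= 0.75 * lease.remaining_economic_life_years,
        # "substantially all" of the fair value (90% rule of thumb)
        present_value(lease) >= 0.90 * lease.fair_value,
        lease.specialized_asset,
    ]
    return "finance" if any(tests) else "operating"


# Hypothetical 4-year lease on a 30-year asset, with no guarantee
base = Lease(False, False, 4, 30, 100.0, 0.0, 1000.0, 0.05, False)
print(classify(base))  # operating

# The same lease with a large residual value guarantee fails the PV test
print(classify(replace(base, residual_value_guarantee=900.0)))  # finance
```

The second call shows why a lessee's guarantee matters: ASC 842 adds it to the lease payments in the present-value test, so a large enough guarantee can on its own tip a short lease into finance-lease territory. Whether anything like that applies to Meta's contract depends on terms the post does not give.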

A great post and chart by @AndreasSteno on X: It's not an AI scare. It's an OpenAI scare.

The "Google bets" basket (Alphabet, Broadcom and Celestica) just hit a new all-time high, while the "OpenAI" basket (Nvidia, SoftBank and Microsoft) has been hit hard since the end of October. Source: Steno Research, Macrobond, Bloomberg

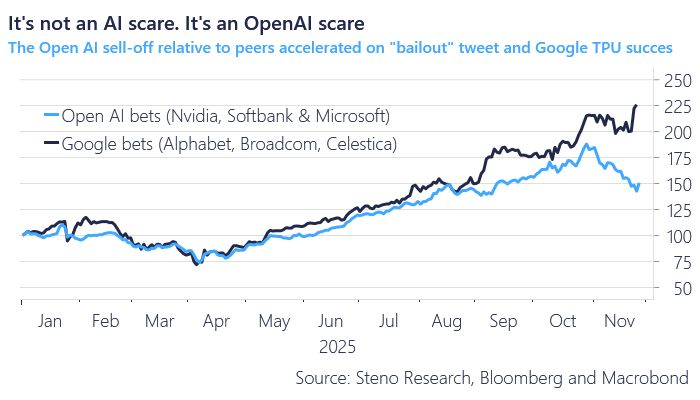
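Steno Research does not say how its baskets are weighted. A common way to build such a chart, assumed here, is an equal-weighted buy-and-hold basket: rebase each stock to 100 at the start date, average the rebased series, then measure how far the basket sits below its peak. The helper names and the two price series are hypothetical placeholders, not market data.

```python
from typing import Dict, List


def rebase(prices: List[float]) -> List[float]:
    """Rescale a price series so that its first value equals 100."""
    first = prices[0]
    return [100.0 * p / first for p in prices]


def equal_weight_basket(series: Dict[str, List[float]]) -> List[float]:
    """Average of the rebased series: equal starting weights, no rebalancing.

    Assumes every series covers the same dates in the same order.
    """
    rebased = [rebase(prices) for prices in series.values()]
    return [sum(day) / len(day) for day in zip(*rebased)]


def drawdown_from_peak(index: List[float]) -> float:
    """Percentage distance of the latest value below the series maximum."""
    peak = max(index)
    return 100.0 * (index[-1] - peak) / peak


# Placeholder prices for two hypothetical stocks, not real market data
basket = equal_weight_basket({"STOCK_A": [10.0, 12.0, 11.0], "STOCK_B": [50.0, 55.0, 45.0]})
print(basket)                                # [100.0, 115.0, 100.0]
print(round(drawdown_from_peak(basket), 1))  # -13.0
```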

Anthropic unveils Claude Opus 4.5, its most intelligent model to date, the company says

It’s meaningfully better at everyday tasks like working with slides and spreadsheets. The new model tops coding benchmarks, leading key tests such as SWE-bench Verified (80.9%), Terminal-bench 2.0 (59.3%), and OSWorld (66.3%), and beats models from Google and OpenAI in coding, agentic tasks, and computer use. It features a 200K-token context window, uses far fewer tokens for the same work, and is much cheaper than earlier Opus models at $5 per million input tokens. Developers can now access it through APIs, apps, and platforms like Amazon Bedrock and GitHub Copilot, with engineers noting its strength on complex bugs. Source: CNBC-TV18
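Because input pricing is linear, the cost of a prompt is simply tokens × price ÷ 1,000,000. A minimal sketch using the $5-per-million input price and 200K-token window quoted above; output tokens are billed separately at a rate the source does not give, so they are left out, and `input_cost_usd` is a hypothetical helper name.

```python
PRICE_PER_MILLION_INPUT_USD = 5.00  # Opus 4.5 input price quoted above
CONTEXT_WINDOW_TOKENS = 200_000     # 200K-token context window quoted above


def input_cost_usd(tokens: int, price_per_million: float = PRICE_PER_MILLION_INPUT_USD) -> float:
    """Cost in US dollars of sending `tokens` input tokens (output not included)."""
    if tokens < 0:
        raise ValueError("token count must be non-negative")
    return tokens * price_per_million / 1_000_000


print(input_cost_usd(CONTEXT_WINDOW_TOKENS))  # 1.0  (a completely full context window)
print(input_cost_usd(20_000))                 # 0.1
```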

TPU > GPU ???

Google's AI chips, TPUs (tensor processing units), are having a moment. These semiconductors were used to train Google's latest genAI model, Gemini 3, which has received rave reviews, and they are cheaper to use than Nvidia's offerings.

🚀 But here's the real reason Google invented the TPU

Back in 2013, Google ran a simple forecast that scared everyone: if every Android user used voice search for just 3 minutes a day, Google would need to double its global data centers. Not because of videos. Not because of storage. But because AI was too expensive to run on normal chips.

So Google made a bold move:

👉 Build its own AI chip, the TPU.

Fifteen months later, it was already powering Google Maps, Photos, and Translate… long before the public even knew it existed.

⚡ Why TPUs Matter

GPUs are great, but they were built for video games, not AI. TPUs were built only for AI. No extra baggage. No wasted energy. Just raw efficiency and speed.

That focus paid off. TPUs:

- deliver better performance per dollar
- use less energy
- are faster for many AI tasks

And with each generation, Google doubles performance. Even Nvidia's CEO, Jensen Huang, openly respects Google's TPU program.

🤔 Then why don't more companies use TPUs?

Simple: most engineers grew up with Nvidia + CUDA, and TPUs are only available through Google Cloud. Switching ecosystems is hard, even if the tech is better.

☁️ The Bigger Picture: Google's Cloud Advantage

AI is crushing cloud margins because everyone depends on Nvidia. Google doesn't. It owns both the chip and the software stack. That means:

- ✔️ lower costs
- ✔️ better margins
- ✔️ faster innovation
- ✔️ a defensible advantage competitors can't easily copy

Some experts now say TPUs are as good as, or even better than, Nvidia's best chips.

🔥 The Punchline

Google didn't build TPUs to sell chips. It built them to survive its own AI growth. Today, TPUs might be Google Cloud's biggest competitive weapon for the next decade.

And the moment Google fully opens them to the world? The AI infrastructure game changes.

Source: zerohedge, uncoveralpha

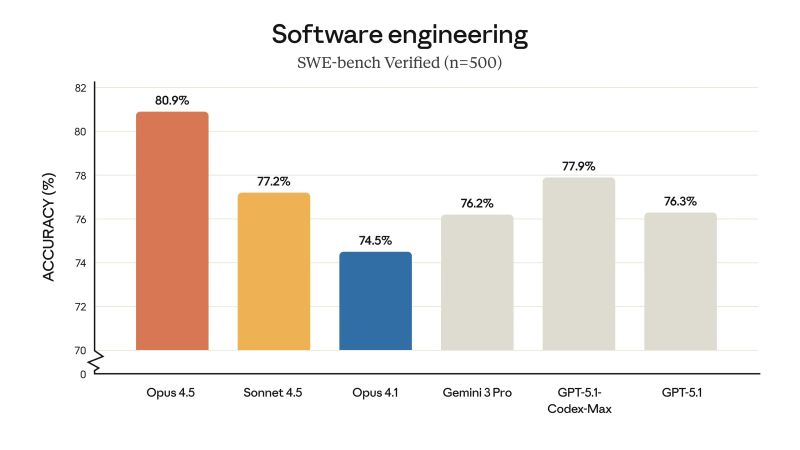

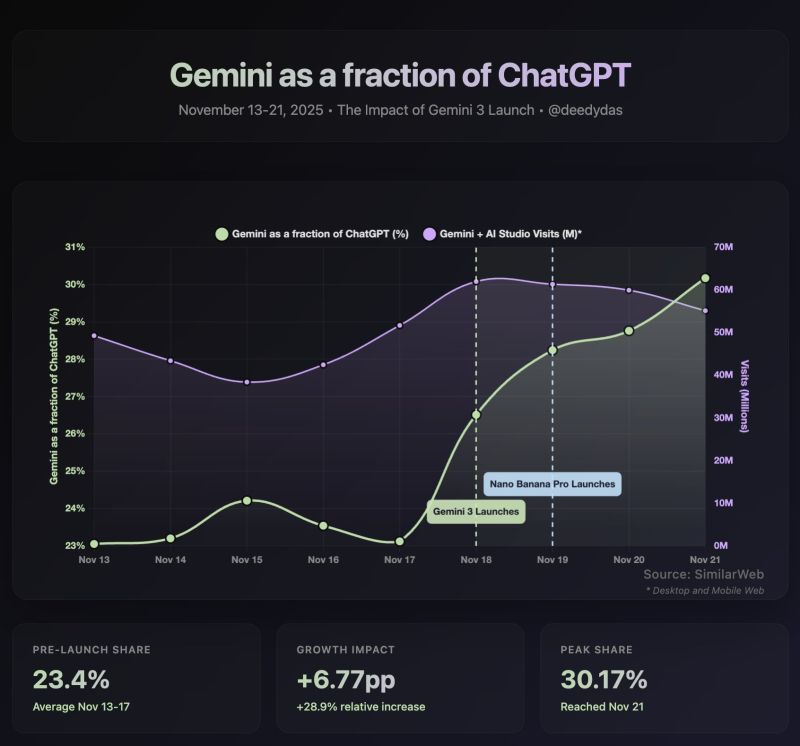

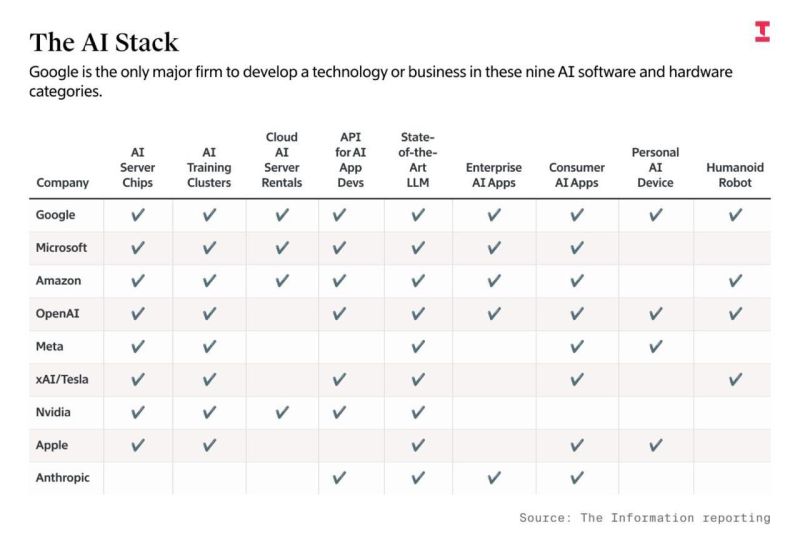
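"Performance per dollar" is a ratio: work done divided by what it costs. A minimal sketch, measuring work as tokens processed and cost as an hourly rental price; the `Accelerator` type and both sets of figures are hypothetical and are not benchmarks of any real TPU or Nvidia GPU.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Hypothetical chip with an illustrative throughput and rental price."""
    name: str
    tokens_per_second: float  # sustained throughput on some fixed workload
    usd_per_hour: float       # hourly rental price


def tokens_per_dollar(chip: Accelerator) -> float:
    """Tokens processed per US dollar: one hour of throughput divided by the hourly price."""
    return chip.tokens_per_second * 3600 / chip.usd_per_hour


chip_x = Accelerator("chip X", tokens_per_second=1000.0, usd_per_hour=2.0)
chip_y = Accelerator("chip Y", tokens_per_second=1500.0, usd_per_hour=4.0)

print(tokens_per_dollar(chip_x))  # 1800000.0
print(tokens_per_dollar(chip_y))  # 1350000.0
best = max([chip_x, chip_y], key=tokens_per_dollar)
print(best.name)                  # chip X
```

Chip Y is faster in raw terms, but chip X does more work per dollar, which is the sense in which a chip can win on price-performance without being the fastest.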

Very interesting chart by Jen Zhu (@jenzhuscott) showing that Google has the most comprehensive AI stack of all its peers and competitors.

It means Google has more defensibility against the incestuous financing games that are now at the core of the "AI bubble". She also notes that Gemini's market share has grown rapidly, from 5.6% 12 months ago to 13.7% now, mostly at the expense of ChatGPT; this was before the launch of Gemini 3. Source: reporting by The Information
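In numbers, Gemini's move from 5.6% to 13.7% is a gain of 8.1 percentage points, leaving its share about 2.45 times what it was a year earlier:

$$
\Delta = 13.7\% - 5.6\% = 8.1\ \text{pp}, \qquad \frac{13.7}{5.6} \approx 2.45
$$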
